Copyright © 2014 Géza Horváth, Benedek Nagy, University of Debrecen

Table of Contents

- Formal Languages and Automata Theory

- Introduction

- 1. Elements of Formal Languages

- 2. Regular Languages and Finite Automata

- 3. Linear Languages

- 4. Context-free Languages

- 5. Context-Sensitive Languages

- 6. Recursively Enumerable Languages and Turing Machines

- 7. Literature

List of Figures

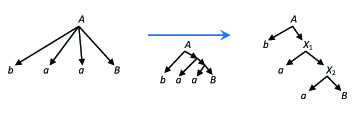

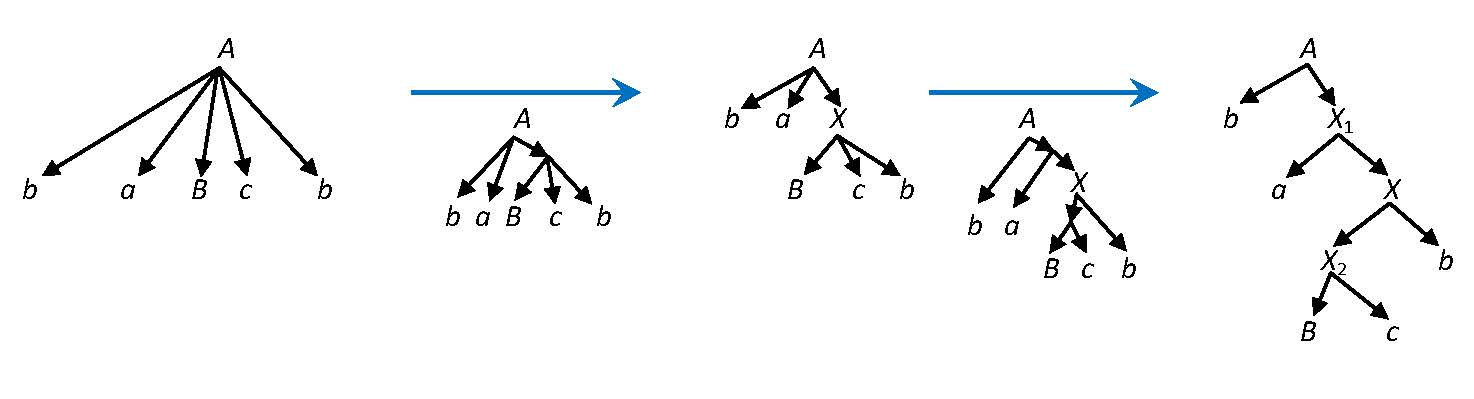

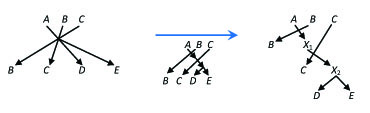

- 2.1. In derivations the rules with long right hand side are replaced by chains of shorter rules, resulting binary derivation trees in the new grammar.

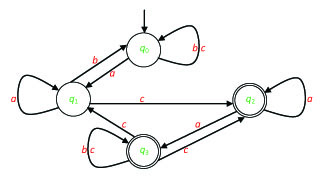

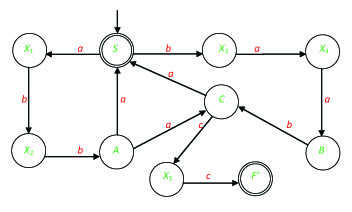

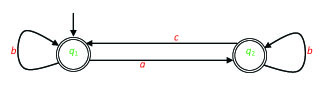

- 2.2. The graph of the automaton of Example 21.

- 2.3. The graph of the automaton of Exercise 19.

- 2.4. The graph of the automaton of Exercise 22.

- 2.5. The graph of the automaton of Exercise 25.

- 2.6. The graph of the automaton of Example 26.

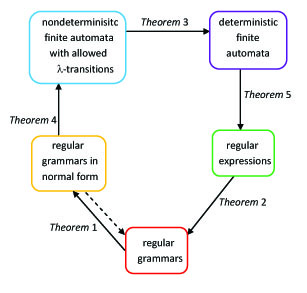

- 2.7. The equivalence of the three types of descriptions (type-3 grammars, regular expressions and finite automata) of the regular languages.

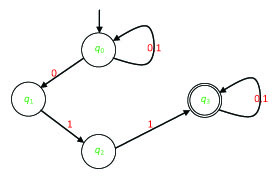

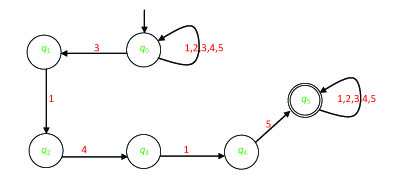

- 2.8. The graph of the automaton of Exercise 31.

- 2.9. The graph of the automaton of Exercise 32.

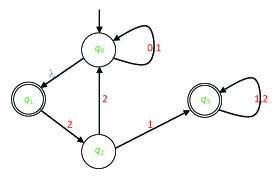

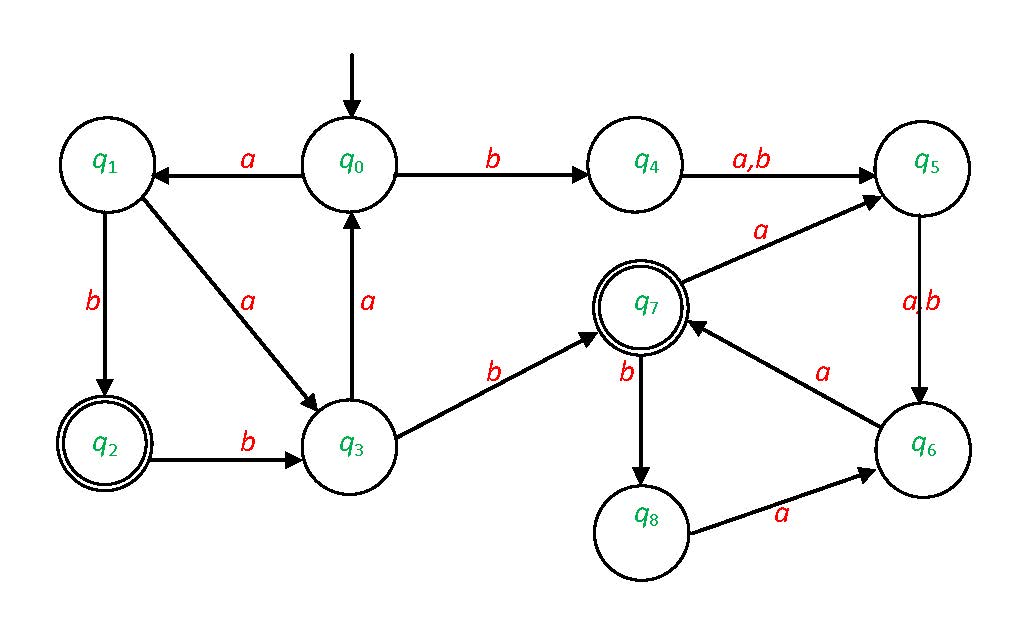

- 2.10. The graph of the automaton of Exercise 37.

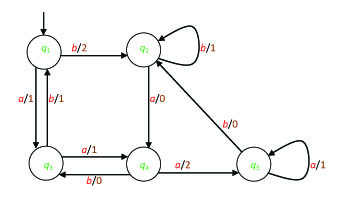

- 2.11. The graph of the Mealy automaton of Example 30.

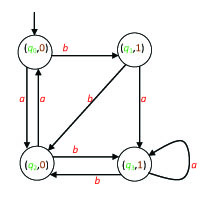

- 2.12. The graph of the Mealy automaton of Exercise 42.

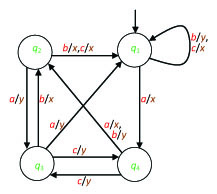

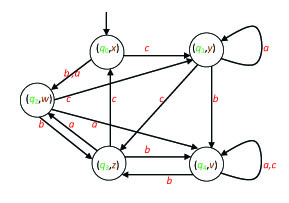

- 2.13. The graph of the Mealy automaton of Exercise 33.

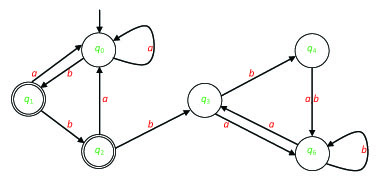

- 2.14. The graph of the Mealy automaton of Exercise 46.

- 3.1. In derivations the rules with long right hand side (left) are replaced by chains of shorter rules in two steps, causing a binary derivation tree in the resulting grammar (right).

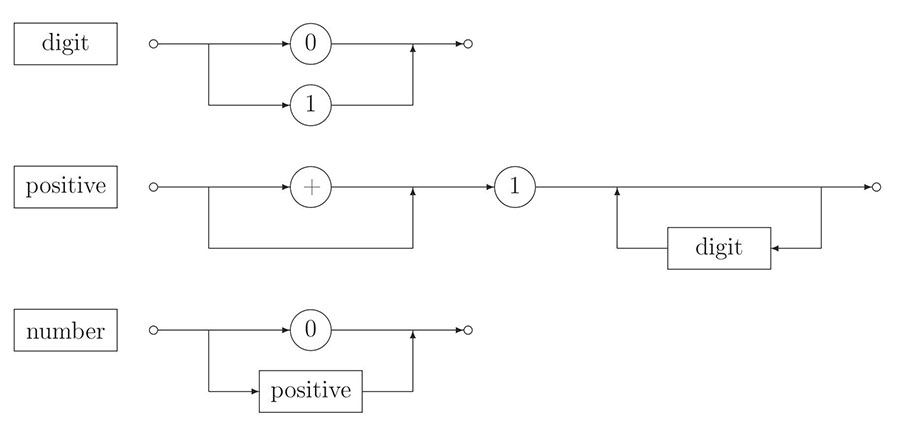

- 4.1. Syntax diagram.

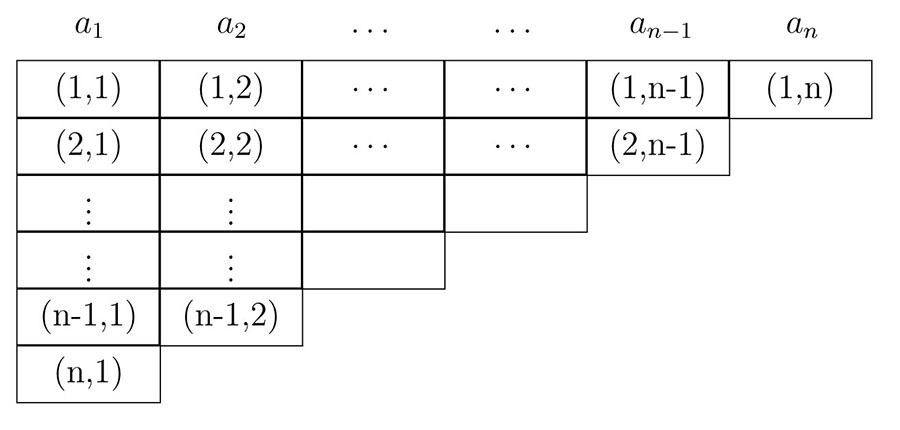

- 4.2. The triangular matrix M for the CYK algorithm.

- 4.3. The triangular matrix M for the CYK algorithm of the Example 45.

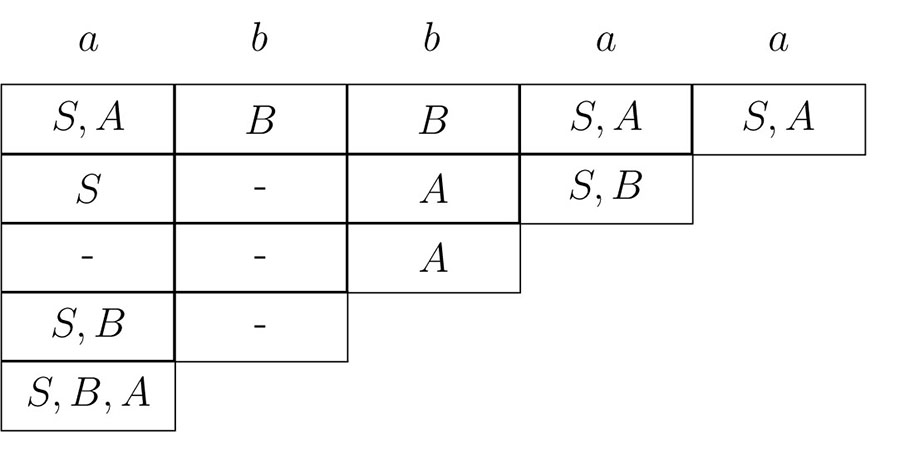

- 4.4. The triangular matrix M for the Earley algorithm.

- 4.5. The triangular matrix M for the Earley algorithm of the example 46.

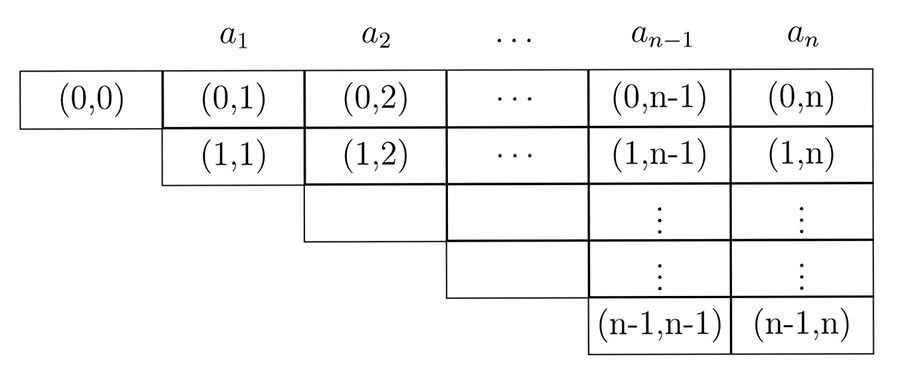

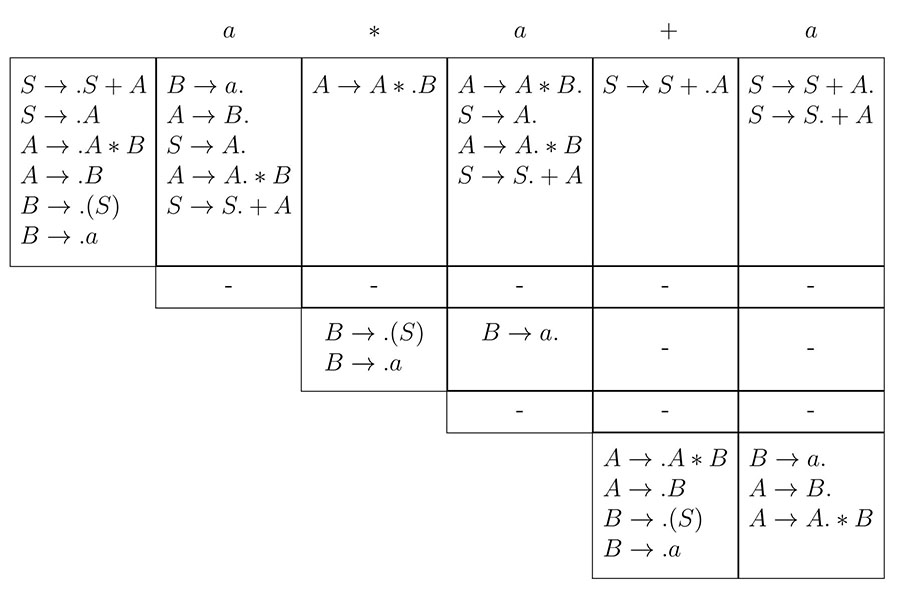

- 4.6. The graphical notation for the example 47.

- 4.7. The graphical notation for the example 48.

- 4.8. The graphical notation for the example 49.

- 4.9. The graphical notation for the example 50.

- 5.1. In derivations the rules with long right hand side are replaced by chains of shorter rules.

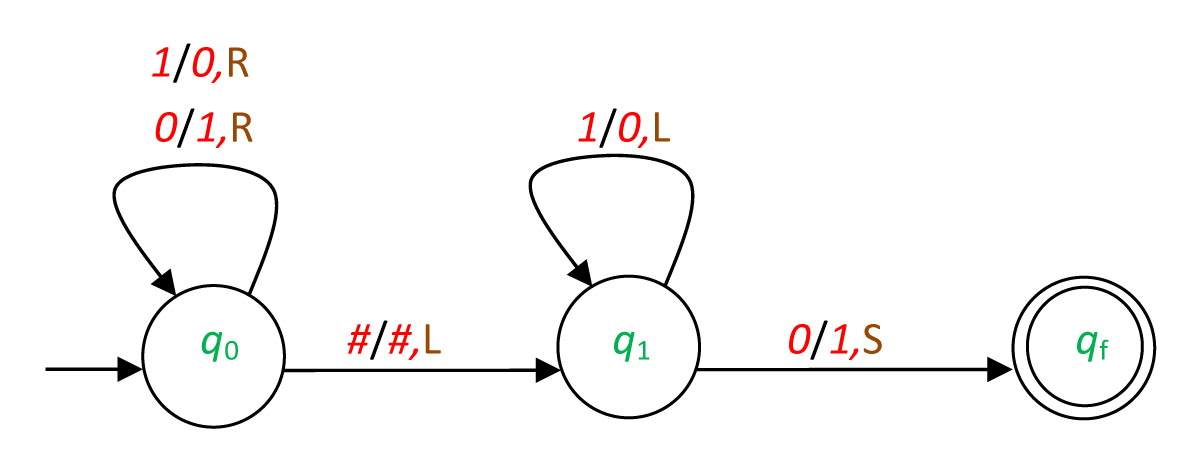

- 6.1. The graphical notation for the Example 58.

List of Tables

Géza Horváth, Benedek Nagy

University of Debrecen, 2014

© Géza Horváth, Benedek Nagy

Typotex Publishing, www.typotex.hu

ISBN: 978-963-279-344-3

Creative Commons NonCommercial-NoDerivs 3.0 (CC BY-NC-ND 3.0) A szerző nevének feltüntetése mellett nem kereskedelmi céllal szabadon másolható, terjeszthető, megjelentethető és előadható, de nem módosítható.

Készült a TÁMOP-4.1.2.A/1-11/1-2011-0063 azonosítószámú „Sokprocesszoros rendszerek a mérnöki gyakorlatban” című projekt keretében.

Formal Languages and Automata Theory are one of the most important base fields of (Theoretical) Computer Science. They are rooted in the middle of the last century, and these theories find important applications in other fields of Computer Science and Information Technology, such as, Compiler Technologies, at Operating Systems, ... Although most of the classical results are from the last century, there are some new developments connected to various fields.

The authors of this book have been teaching Formal Languages and Automata Theory for 20 years. This book gives an introduction to these fields. It contains the most essential parts of these theories with lots of examples and exercises. In the book, after discussing some of the most important basic definitions, we start from the smallest and simplest class of the Chomsky hierarchy. This class, the class of regular languages, is well known and has several applications; it is accepted by the class of finite automata. However there are some important languages that are not regular. Therefore we continue with the classes of linear and context-free languages. These classes have also a wide range of applications, and they are accepted by various families of pushdown automata. Finally, the largest classes of the hierarchy, the families of context-sensitive and recursively enumerable languages are presented. These classes are accepted by various families of Turing machines. At the end of the book we give some further literature for those who want to study these fields more deeply and/or interested to newer developments.

The comments of the lector and some other colleagues are gratefully acknowledged.

Debrecen, 2014.

Géza Horváth and Benedek Nagy

Summary of the chapter: In this chapter, we discuss the basic expressions, notations, definitions and theorems of the scientific field of formal languages and automata theory. In the first part of this chapter, we introduce the alphabet, the word, the language and the operations over them. In the second part, we show general rewriting systems and a way to define algorithms by rewriting systems, namely Markov (normal) algorithms. In the third part, we describe a universal method to define a language by a generative grammar. Finally, in the last part of this chapter we show the Chomsky hierarchy: a classification of generative grammars are based on the form of their production rules, and the classification of formal languages are based on the classification of generative grammars generating them.

An alphabet is a finite nonempty set of symbols. We can use the union, the intersection and the relative complement set operations over the alphabet. The absolute complement operation can be used if a universe set is defined.

Example 1. Let the alphabet E be the English alphabet, the alphabet V contains the vowels,

and the alphabet C is the set of the consonants.

Then V∪C=E, V∩C=∅ and

A word is a finite sequence of symbols of the alphabet. The length of the word is the number of symbols it is composed of, with repetitions. Two words are equal if they have the same length and they have the same letter in each position. This might sound trivial, but let us see the following example:

Example 2. Let the alphabet V={1,2,+} and the words p=1+1, q=2. In this case 1+1≠ 2, i.e. p≠q, because these two words have different lengths, and also different letters in the first position.

There is a special operation on words called concatenation, this is the operation of joining two words end to end.

Example 3. Let the alphabet E be the English alphabet. Let the word p=railway, and the word q=station over the alphabet E. Then, the concatenation of p and q is p· q=railwaystation. The length of p is ∣p∣=7 and the length of q is ∣q∣=7, as well.

If there is no danger of confusion we can omit the dot from between the parameters of the concatenation operation. It is obvious that the equation ∣pq∣=∣p∣+∣q∣ holds for all words p and q. There is a special word called an empty word, whose length is 0 and denoted by λ. The empty word is the identity element of the concatenation operation, λp=pλ=p holds for each word p over any alphabet. The word u is a prefix of the word p if there exists a word w such that p=uw, and the word w is a suffix of the word p if there exists a word u such that p=uw. The word v is a subword of word p if there exists words u,w such that p=uvw. The word u is a proper prefix of the word p, if it is a prefix of p, and the following properties hold: u≠λ and u≠p. The word w is a proper suffix of the word p, if it is a suffix of p, and w≠λ, w≠ p. The word v is a proper subword of the word p, if it is a subword of p, and v≠λ, v≠p. As you can see, both the prefixes and suffixes are subwords, and both the proper prefixes and proper suffixes are proper subwords, as well.

Example 4. Let the alphabet E be the English alphabet. Let the word p=railwaystation, and the words u=rail, v=way and w=station. In this example, the word u is a prefix, the word w is a suffix and the word v is a subword of the word p. However, the word uv is also a prefix of the word p, and it is a subword of the word p, as well. The word uvw is a suffix of the word p, but it is not a proper suffix.

We can use the exponentiation operation on a word in a classical way, as well. p0=λ by definition,

p1=p,

and pi=pi-1p,

for each integer i ≥ 2.

The union of pi for each integer i ≥ 0 is denoted by p*,

and the union of pi for each integer i ≥ 1 is denoted

by p+.

These operations are called Kleene star and Kleene plus operations.

p*=p+∪{λ} holds for each word p,

and if p≠λ

then p+=p* \ {λ}.

We can also use the Kleene star and Kleene plus operations on an alphabet. For an alphabet V we denote the set of all words

over the alphabet by V *, and the set of all words, but the empty word by V +.

These operations are called Kleene star and Kleene plus operations.

p*=p+∪{λ} holds for each word p,

and if p≠λ

then p+=p* \ {λ}.

We can also use the Kleene star and Kleene plus operations on an alphabet. For an alphabet V we denote the set of all words

over the alphabet by V *, and the set of all words, but the empty word by V +.

A language over an alphabet is not necessarily a finite set of words, and it is usually denoted by L.

For a given alphabet V the language L over V is

L ⊆ V *. We have a set again, so we can use the classical

set operations, union, intersection, and the complement operation, if the operands are defined over the same alphabet.

For the absolute complement operation we use V * for universe, so  We can also use the concatenation operation. The concatenation of the languages L1 and

L2 contains

all words pq where p ∈ L1 and q ∈

L2. The exponentiation operation is defined in a classical way,

as well. L0={λ} by definition, L1=L,

and Li=Li-1· L,

for each integer i ≥ 2.

The language L 0 contains exactly one word, the empty word. The empty language does not contain any words,

Le=∅, and L 0 ≠ Le.

We can also use the Kleene star and

Kleene plus closure for languages.

We can also use the concatenation operation. The concatenation of the languages L1 and

L2 contains

all words pq where p ∈ L1 and q ∈

L2. The exponentiation operation is defined in a classical way,

as well. L0={λ} by definition, L1=L,

and Li=Li-1· L,

for each integer i ≥ 2.

The language L 0 contains exactly one word, the empty word. The empty language does not contain any words,

Le=∅, and L 0 ≠ Le.

We can also use the Kleene star and

Kleene plus closure for languages.

so L*=L + if and only if λ ∈ L.

so L*=L + if and only if λ ∈ L.

The algebraic approach to formal languages can be useful for readers who prefer the classical mathematical models. The free monoid on an alphabet V is the monoid whose elements are from V *. From this point of view both operations, concatenation, (also called product) - which is not a commutative operation in this case -, and the union operation (also called addition), create a free monoid on set V, because these operations are associative, and they both have an identity element.

Associative:

(L1 · L2) · L3 = L1 · (L2 · L3),

(L1 ∪ L2) ∪ L3 = L1 ∪ (L2 ∪ L3),

where L1, L2, L3 ⊆ V *.

Identity element:

L0 · L1 = L1 · L0 = L1,

Le ∪ L1 = L1 ∪ Le = L1,

where L1 ⊆ V *, L 0= {λ}, Le = ∅.

The equation Le · L1 = L1 · Le = Le also holds for each L1 ⊆ V *.

In this section, the definition of basic rewriting systems in general and a specific one, the Markov algorithm is presented.

Definition 1. A formal system (or rewriting system) is a pair W = ( V, P ), where V is an alphabet and P is a finite subset of the Cartesian product V *× V *. The elements of P are the (rewriting) rules or productions. If ( p, q ) ∈ P, then we usually write it in the form p → q. Let r, s ∈ V *, and we say that s can be directly derived (or derived in one step) from r (formally: r ⇒ s) if there are words p, p', p'', q ∈ V * such that r = p' pp'', s = p' qp'' and p → q ∈ P. The word s can be derived from r (formally: r ⇒ * s) if there is a sequence of words r0, r1, ..., rk ( k ≥ 1 ) such that r0 = r, rk = s and ri ⇒ ri+1 holds for every 0 ≤ i < k. Moreover, we consider the relation r ⇒* r for every word r ∈ V *. (Actually, the relation ⇒* is the reflexive and transitive closure of the relation ⇒.

A rewriting (or derivation) step can be understood as follows: the word s can be obtained from the word r in such a way that in s the right hand side q of a production of P is written instead of the left hand side p of the same production in r.

Example 5. Let W = ( V, P ) with V = { a, b, c, e, l, n, p, r, t } and P = { able → can, r → c, pp → b }. Then applerat ⇒ applecat ⇒ ablecat ⇒ cancat, and thus applerat ⇒* cancat in the system W.

Now, we are going to present a special version of the rewriting systems that is deterministic, and was given by the Russian mathematician A. A. Markov as normal algorithms.

Definition 2. M = ( V, P, H ) is a Markov (normal) algorithm where

V is a finite alphabet,

P is a finite ordered set (list) of productions of the form V* × V *,

H ⊆ P is the set of halting productions.

The execution of the algorithm on input w ∈ V * is as follows:

Find the first production in P such that its left-hand-side p is a subword of w. Let this production be p → q. If there is no such a production, then step 5 applies.

Find the first (leftmost) occurrence of p in w (such that w = p'pp'' and p'p contains the subword p exactly once: as a suffix).

Replace this occurrence of p in w by the right hand side q of the production.

If p → q ∈ H, i.e., the applied production is a halting production, then the algorithm is terminated with the obtained word (string) as an output.

If there are no productions in P that can be applied (none of their left-hand-side is a subword of w), then the algorithm terminates and w is its output.

If a rewriting step is done with a non halting production, then the algorithm iterates (from step 1) for the newly obtained word as w.

We note here that rules of type λ → q also can be applied to insert word q to the beginning of the current (input) word.

The Markov algorithm may be terminated with an output, or may work forever (infinite loop).

Example 6. Let M = ({1,2,3 },{21→ 12, 31→ 13, 32→ 23},{}) be a Markov algorithm. Then, it is executed to the input 1321 as follows:

1321 ⇒ 1312 ⇒ 1132 ⇒ 1123.

Since there are no more applicable productions, it is terminated. Actually, it is ordering the elements of the input word in a non-decreasing way.

Example 7. Let

W=({a,b,c},{aa → bbb, ac→ bab, bc → a},{}).

Let us apply the algorithm W to the input

ababacbbaacaa.

As it can be seen in Animation 1, the output is

ababbabbbbbabbb.

(For better understanding in the Animation the productions of the algorithm is numbered.)

Exercise 1. Execute the Markov algorithm

M = ({ 1, + }, { 1 + → + 1, + + → +, + → λ } ,{ + → λ })

on the following input words:

1 + 1 + 1 + 1,

11 + 11 + 11,

111,

111 + 1 + 11.

Exercise 2. Execute the Markov algorithm M = ({1,×, a,b },

{ × 11 → a × 1, × 1 → a, 1a → a1b, ba → ab, b1 → 1b, a1 → a, ab → b, b → 1 } ,{ })

on the following input words:

1 × 1,

11 × 11,

111 × 1,

111 × 11.

The previous two exercises are examples for Markov algorithms that compute unary addition and unary multiplication, respectively.

It is important to know that this model of the algorithm is universal in the sense that every problem that can be solved in an algorithmic way can be solved by a Markov algorithm as well.

In the next section, other variations of the rewriting systems are shown: the generative grammars, which are particularly highlighted in this book. As we will see, the generative grammars use their productions in a non-deterministic way.

The generative grammar is a universal method to define languages. It was introduced by Noam Chomsky in the 1950s. The formal definition of the generative grammar is the following:

Definition 3. The generative grammar (G) is the following quadruple:

G = ( N, T, S, P )

where

N is the set of the nonterminal symbols, also called variables, (finite nonempty alphabet),

T is the set of the terminal symbols or constants (finite nonempty alphabet),

S is the start symbol, and

P is the set of the production rules.

The following properties hold: N ∩ T = ∅ and S ∈ N.

Let us denote the union of the sets N and T by V ( V = N ∪ T). Then, the form of the production rules is V *N V * → V *.

Informally, we have two disjoint alphabets, the first alphabet contains the so called start symbol, and we also have a set of productions. The productions have two sides, both sides are words over the joint alphabet, and the word on the left hand side must contain a nonterminal symbol. We have a special notation for the production rules. Let the word p be the left hand side of the rule, and the word q be the right hand side of the rule, then we use the p → q ∈ P expression.

Example 8. Let the generative grammar G be G = ( N, T, S, P ), where

N = {S,A}, T = {a,b}, and P = { S → bAbS, bAb → bSab, A → λ, S → aa }.

In order to understand the operation of the generative grammar, we have to describe how we use the production rules to generate words. First of all, we should give the definition of the derivation.

Definition 4. Let G = ( N, T, S, P ) be a generative grammar, and let p and q be words over the joint alphabet V = N ∪ T. We say that the word q can be derived in one step from the word p, if p can be written in a form p = uxw, q can be written in a form q = uyw, and x → y ∈ P. (Denoted by p ⇒ q.) The word q can be derived from the word p, if q = p or there are words r1, r2,..., rn such that r1 = p, rn = q and the word ri can be derived in one step from the word ri-1, for each 2 ≤ i ≤ n. (Denoted by p ⇒* q.)

Now, that we have a formal definition of the derivation, let us see an example for deeper understanding.

Example 9. Let the generative grammar G be G = ( N, T, S, P ) , where

N = {S,A}, T = {0,1}, and P = { S → 1, S → 1A, A → AA, A → 0, A → 1 }.

Let the words p,t and q be p = A0S0, t = A01A0, and q = A0110. In this example, the word t can be derived from the word p in one step, (p → t), because p can be written in a form p = uxw, where u = A0, x = S, w = 0, and the word t can be written in a form t = uyw, where y = 1A, and S → 1A ∈ P.

The word q can be derived from the word p, (p ⇒*q), because there exist words r1,r2 and r3 such that r1 = p, r2 = t, r3 = q and r1 ⇒ r2 and r2 ⇒ r3.

Now we have all the definitions to demonstrate how we can use the generative grammar to generate a language.

Definition 5. Let G = ( N, T, S, P ) be a generative grammar. The language generated by the grammar G is

L(G)={p∣p ∈ T*, S ⇒* p}.

The above definition claims that the language generated by the grammar G contains each word over the terminal alphabet which can be derived from the start symbol S.

Example 10. Let the generative grammar G be G = ( N, T, S, P ), where

N = {S,A}, T = {a,b}, and P = { S → bb, S → ASA, A → a }.

In this example, the word bb can be derived from the start symbol S in one step, because S → bb ∈ P, so the word bb is in the generated language, bb ∈ L(G).

The word abba can be derived from the start symbol, because S ⇒ ASA, ASA ⇒ aSA, aSA ⇒ abbA, abbA → abba, so the word abba is also in the generated language, abba ∈ L(G).

The word bab can not be derived from the start symbol, so it is not in the generated language, bab ∉ L(G).

In this case, it is easy to determine the generated language, L(G) = {aibbai∣i ≥ 0}.

Exercise 3. Create a generative grammar G, which generates the language L = {a*b+c*}!

Exercise 4. Create a generative grammar G, which generates the language of all binary numbers!

The Chomsky hierarchy was described first by Noam Chomsky in 1956. It classifies the generative grammars based on the forms of their production rules. The Chomsky hierarchy also classifies languages, based on the classes of generative grammars generating them. The formal definition is provided below.

Definition 6. (Chomsky hierarchy) Let G = ( N, T, S, P ) be a generative grammar.

Type 0 or unrestricted grammars. Each generative grammar is unrestricted.

Type 1 or context-sensitive grammars. G is called context-sensitive, if all of its production rules have a form

p1Ap2 → p1qp2,

or

S → λ,

where p1, p2 ∈ V *, A ∈ N and q ∈ V +. If S → λ ∈ P then S does not appear in the right hand side word of any other rule.

Type 2 or context-free grammars. The grammar G is called context-free, if all of its productions have a form

A → p,

where A ∈ N and p ∈ V *.

Type 3 or regular grammars. G is called regular, if all of its productions have a form

A → r,

or

A → rB,

where A,B ∈ N and r ∈ T *.

Example 11. Let the generative grammar G0 be

G0 = ({S,X},{x,y},S,P) P = { S → SXSy, XS → y, X → SXS, S → yxx }.

This grammar is unrestricted, because the second rule is not a context-sensitive rule.

Example 12. Let the generative grammar G1 be

G1 = ({S,X},{x,y},S,P) P = { S → SXSy, XSy → XyxXy, S → yXy, X → y }.

This grammar is context-sensitive, because each production rule has an appropriate form.

Example 13. Let the generative grammar G2 be

G2 = ({S,X},{x,y},S,P) P = { S → SyS, S → XX, S → yxy, X → ySy, X → λ }.

This grammar is context-free, because the left hand side of each production rule is a nonterminal symbol.

Example 14. Let the generative grammar G be

G = ({S,X},{x,y},S,P) P = { S → xyS, S → X, X → yxS, S → x, X → λ }.

This grammar is regular, because the left hand side of each production rule is a nonterminal, and the right hand side contains at most one nonterminal symbol, in last position.

Exercise 5. What is the type of the following grammars?

1. G = ({S,A},{0,1},S,P) P = { S → 0101A, S → λ, A → 1S, A → 000 }. 2. G = ({S,A,B},{0,1},S,P) P = { S → 0A01B, S → λ, 0A01 → 01A101, A → 0BB1, B → 1A1B, B → 0011, A → 1 }. 3. G = ({S,A,B},{0,1},S,P) P = { S → 0ABS1, S → 10, 0AB → 01SAB, 1SA → 111, A → 0, B → 1 }. 4. G = ({S,A},{0,1},S,P) P = { S → 00A, S → λ, A → λ, A → S1S }.

Definition 7. The language L is regular, if there exists a regular grammar G such that L = L (G).

The same kind of definitions are given for the context-free, context-sensitive, and recursively enumerable languages:

Definition 8. The language L is context-free, if there exists a context-free grammar G such that L = L (G).

Definition 9. The language L is context-sensitive, if there exists a context-sensitive grammar G such that L = L (G).

Definition 10. The language L is called recursively enumerable, if there exists an unrestricted grammar G such that L = L (G).

Although these are the most often described classes of the Chomsky hierarchy, there are also a number of subclasses which are worth investigating. For example, in chapter 3 we introduce the linear languages. A grammar is linear, if it is context-free, and the right hand side of its rules contain maximum one nonterminal symbol. The class of linear languages is a proper subset of the class of context-free languages, and it is a proper superset of the regular language class. Example 15. shows a typical linear language and a typical linear grammar.

Example 15. Let L be the language of the palindromes over the alphabet T = {a,b}. (Palindromes are words that read the same backward or forward.) Language L is generated by the following linear grammar.

G = ({S},{a,b},S,P) P = { S → aSa, S → bSb, S → a, S → b, S → λ }.

Language L is linear, because it can be generated by the linear grammar G.

Example 16. In Example 9 we can see a context-free grammar, which is not linear, because the production A → AA is not a linear rule. However, this context-free grammar generates a regular language, because the same language can be generated by the following regular grammar.

G = ({S,A},{0,1},S,P, and P = { S → 1A, A → 1A, A → 0A, A → λ }.

It is obvious that context-sensitive grammars are unrestricted as well, because each generative grammar is unrestricted. It is also obvious that regular grammars are context-free as well, because in regular grammars the left hand side of each rule is a nonterminal, which is the only condition to be satisfied for a grammar in order to be context-free.

Let us investigate the case of the context-free and context sensitive grammars.

Example 17. Let the grammar G be

G = ({S,A},{x,y},S,P) P = { S → AxA, A → SyS, A → λ, S → λ }.

This grammar is context-free, because the left hand side of each rule contains one nonterminal. At the same time, this grammar is not context-sensitive, because

the rule A → λ is not allowed in context-sensitive grammars,

if S → λ ∈ P, then the rule A → SyS is not allowed.

This example shows that there are context-free grammars which are not context-sensitive. Although this statement holds for grammars, we can show that in the case of languages the Chomsky hierarchy is a real hierarchy, because each context-free language is context-sensitive as well. To prove this statement, let us consider the following theorem.

Theorem 1. For each context-free grammar G we can give context-free grammar G', which is context-sensitive as well, such that L (G) = L (G').

Proof. We give a constructive proof of this theorem. We are going to show the necessary steps to receive an equivalent context-sensitive grammar G' for a context-free grammar G = ( N, T, S, P ).

First, we have to collect each nonterminal symbol from which the empty word can be derived. To do this, let the set U (1) be U (1) = {A∣A ∈ N, A → λ ∈ P}. Then let U (i) = U (i-1) ∪ {A∣A → B1B2...Bn ∈ P, B1, B2,..., Bn ∈ U (i-1)}. Finally, we have an integer i such that U (i) = U (i-1) = U which contains all of the nonterminal symbols from which the empty word can be derived.

Second, we are going to create a new set of rules. The right hand side of these rules should not contain the empty word. Let P' = (P ∪ P1)\{A→ λ∣A ∈ N}. The set P1 contains production rules as well. B → p ∈ P1 if B → q ∈ P and we get p from q by removing some letters contained in set U.

Finally, if S ∉ U, then G' = ( N, T, S, P' ). If set U contains the start symbol, then the empty word can be derived from S, and λ∈ L (G). In this case, we have to add a new start symbol to the set of nonterminals, and we also have to add two new productions to the set P1, and G' = ( N ∪{S'}, T, S', P' ∪ {S' → λ, S' → S}), so G' generates the empty word, and it is context sensitive.

QED.

Example 18. Let the context-free grammar G be

G = ({S,A,B},{x,y},S,P) P = { S → ASxB, S → AA, A → λ, B → SyA }.

Now, we create a context-sensitive generative grammar G', such that L (G') = L (G).

1. U (1) = {A}, U (2) = {A,S} = U. 2. P' = { S → ASxB, S → SxB, S → AxB, S → xB, S → AA, S → A, B → SyA, B → yA, B → Sy, B → y }. 3. G' = ({S,A,B,S'},{x,y},S',P' ∪{S' → λ, S' → S}).

Exercise 6. Create a context-sensitive generative grammar G', such that L (G') = L (G)!

G = ({S,A,B,C},{a,b},S,P) P = { S → aAbB, S → aCCb, C → λ, A → C, B → ACC, A → aSb }.

Exercise 7. Create a context-sensitive generative grammar G', such that L (G') = L (G)!

G = ({S,X,Y},{0,1},S,P) P = { S → λ, S → XXY, X → Y0Y1, Y → 1XS1, X → S00S }.

In the following chapters we are going to investigate the language classes of the Chomsky hierarchy. We will show algorithms to decide if a word is in a language generated by a generative grammar. We will learn methods which help to specify the position of a language in the Chomsky hierarchy, and we are going to investigate the closure properties of the different language classes.

We will introduce numerous kinds of automata. Some of them accept languages, and we can use them as an alternative language definition tool, however, some of them calculate output words from the input word, and we can see them as programmable computational tools. First of all, in the next chapter, we are going to deal with the most simple class of the Chomsky hierarchy, the class of regular languages.

Table of Contents

Summary of the chapter: In this chapter, we deal with the simplest languages of the Chomsky hierarchy, i.e., the regular languages. We show that they can be characterized by regular expressions. Another description of this class of languages can be given by finite automata: both the class of deterministic finite automata and the class of nondeterministic finite automata accept this class. The word problem (parsing) can be solved in real-time for this class by the deterministic finite automata. This class is closed under regular and under set-theoretical operations. This class also has a characterization in terms of analyzing the classes of possible continuations of the words (Myhill-Nerode theorem). We also present Mealy-type and Moore-type transducers: finite transducers are finite automata with output.

In order to be comprehensive we present the definition of regular grammars here again.

Definition (Regular grammars). A grammar G=(N,T,S,P) is regular if each of its productions has one of the following forms: A → u, A → uB, where A,B∈ N, u∈ T*. The languages that can be generated by regular grammars are the regular languages (they are also called type 3 languages of the Chomsky hierarchy).

Animation 2. presents an example for a derivation in a regular grammar.

We note here that the grammars and languages of the definition above are commonly referred to as right-linear grammars and languages, and regular grammars and languages are defined in a more restricted way:

Definition 12. (Alternative definition of regular grammars). A grammar G = ( N, T, S, P ) is regular if each of its productions has one of the following forms: A → a, A → aB, S → λ, where A,B ∈ N, a ∈ T. The languages that can be generated by these grammars are the regular languages.

Now we show that the two definitions are equivalent in the sense that they define the same class of languages.

Theorem 2. The language classes defined by our original definition and by the alternative definition coincide.

Proof. The proof consists of two parts: we need to show that languages generated by grammars of one definition can also be generated by grammars of the other definition.

It is clear the grammars satisfying the alternative definition satisfy our original definition at the same time, therefore, every language that can be generated by the grammars of the alternative definition can also be generated by grammars of the original definition.

Let us consider the other direction. Let a grammar G = ( N, T, S, P ) may contain rules of types A → u and A → uB (A,B ∈ N, u ∈ T*). Then, we give a grammar G' = ( N', T, S', P' ) such that it may only contain rules of the forms A → a, A → aB, S' → λ (where A,B ∈ N', a ∈ T) and it generates the same language as G, i.e., L (G) = L (G').

First we obtain a grammar G'' such that L (G) = L (G'') and G'' = ( N'', T, S, P'' ) may contain only rules of the following forms: A → a, A → aB, A → B, A → λ (where A,B ∈ N'', a ∈ T). Let us check every rule in P: if it has one of the forms above, then we copy it to the set P'', else we will do the following:

if the rule is of the form A → a1... ak for k > 1, where ai ∈ T, i ∈{1,...,k}, then let the new nonterminals X1,..., Xk-1 be introduced (new set for every rule) and added to the set N'' and instead of the actual rule the next set of rules is added to P'': A → a1X1, X1 → a2X2, ..., Xk-2 → ak-1Xk-1, Xk-1 → ak.

if the rule is of the form A → a1... akB for k > 1, where ai ∈ T, i ∈ {1,...,k}, then let the new nonterminals X1,..., Xk-1 be introduced (new set for every rule) and put to the set N'', and instead of the actual rule the next set of rules is added to P'': A → a1X1, X1 → a2X2, ..., Xk-2 → ak-1Xk-1, Xk-1 → akB.

When every rule is analyzed (and possibly replaced by a set of rules) we have grammar G''. It is clear that the set of productions P'' of G'' may contain only rules of the forms A → a, A → aB, A → a, A → λ (where A,B ∈ N'', a∈ T), since we have copied only rules from P that have these forms, and all the rules that are added to the set of productions P'' by replacing a rule are of the forms A → aB, A → a (where A,B ∈ N'', a ∈ T). Moreover, exactly the same words can be derived in G'' and in G. The derivation graphs in the two grammars can be mapped in a bijective way. When a derivation step is applied in G with a rule A → a1... that is not in P'', then the rules must be used in G'' that are used to replace the rule A → a1...: applying the first added rule first A → a1X1, then there is only one rule that can be applied in G'', since there is only one rule added with X1 in its left hand side... therefore, one needs to apply all the rules of the chain that was used to replace the original rule A → a1.... Then, if there is a nonterminal B at the end of the rule A → a1..., then it is located at the end of the last applied rule of the chain, and thus the derivation can be continued in the same way in both grammars. The other way around, if we use a newly introduced rule in the derivation in G'', then we must use all the rules of the chain, and also we can use the original rule that was replaced by this chain of rules in the grammar G. In this way, there is a one-to-one correspondence in the completed derivations of the two grammars. (See Figure 2.1. for an example of replacing a long rule by a sequence of shorter rules.)

Figure 2.1. In derivations the rules with long right hand side are replaced by chains of shorter rules, resulting binary derivation trees in the new grammar.

|

Now we may have some rules in P'' that do not satisfy the alternative definition. The form of these rules can only be A → B and C → λ (where A,B,C ∈ N'', C ≠ S). The latter types of rules can be eliminated by the Empty-word lemma (see Theorem 1.). Therefore, we can assume that we have a grammar G''' = ( N''', T, S', P''' ) such that L (G''') = L (G) and P''' may contain only rules of the forms A → aB, A → B, A → a, S' → λ (where A,B ∈ N''', a∈ T and in case S' → λ ∈ P''' the start symbol S' does not occur on the right hand side of any of the rules of P'''). Let us define the following sets of nonterminals:

let U1 (A) = {A},

let Ui+1 (A) = Ui (A) ∪ {B ∈ N'''∣∃ C ∈ Ui (A) such that C → B ∈ P'''}, for i > 1.

Since N''' is finite there is a minimal index k such that Uk(A) = Uk+1(A). Let U(A) denote the set Uk(A) with the above property. In this way, U(A) contains exactly those nonterminals that can be derived from A by using rules only of the form B → C (where B,C ∈ N'''). We need to replace the parts A ⇒* B → r of the derivations in G''' by a direct derivation step in our new grammar, therefore, let G' = ( N''', T, S', P' ), where P' = {A → r∣∃ B ∈ N''' such that B → r ∈ P''', r ∉ N''' and B ∈ U(A)}. Then G' fulfills the alternative definition, moreover, it generates the same language as G''' and G.

QED.

Further we will call a grammar a regular grammar in normal form if it satisfies our alternative definitions. In these grammars the structures of the rules are more restricted: if we do not derive the empty word, then we can use only rules that have exactly one terminal in their right hand side.

Example 19. Let

G = ({S,A,B},{0,1,2},S,

{ S → 010B,

A → B,

A → 2021,

B → 2A,

B → S,

B → λ

}). Give a grammar that is equivalent to G and is in normal form.

Solution:

Let us exclude first the rules that contain more than one terminal symbols. Such rules are S → 010B and A → 2021. Let us substitute them by the sets

{S → 0X1, X1 → 1X2, X2 → 0B}

and

{A → 2X3, X3 → 0X4, X4 → 2X5, X5 → 1}

of rules, respectively, where the newly introduced nonterminals are {X1, X2} and {X3, X4, X5}, respectively. The obtained grammar is

G'' = ({S, A, B, X1, X2, X3, X4, X5},{0,1,2},S,

{ S → 0X1,

X1 → 1X2,

X2 → 0B,

A → B,

A → 2X3,

X3 → 0X4,

X4 → 2X5,

X5 → 1,

B → 2A,

B → S,

B → λ

).Now, by applying the Empty-word lemma, we can exclude rule B → λ (the empty word can be derived from the nonterminals B and A in our example) and by doing so we obtain grammar

G''' = ({S, A, B, X1, X2, X3, X4, X5}, {0,1,2}, S,

{ S → 0X1,

X1 → 1X2,

X2 → 0B,

X2 → 0,

A → B,

A → 2X3,

X3 → 0X4,

X4 → 2X5,

X5 → 1,

B → 2A,

B → 2,

B → S

}).Now we are excluding the chain rules A → B, B → S. To do this step, first, we obtain the following sets:

| U0 (S) = {S} | U0 (A) = {A} | U0 (B) = {B} | ||

| U1 (S) = {S} = U(S) | U1 (A) = {A,B} | U1 (B) = {B,S} | ||

| U2 (A) = {A,B,S} | U2 (B) = {B,S} = U(B) | |||

| U3 (A) = {A,B,S} = U(A) |

Actually for those nonterminals that are not appearing in chain rules these sets are the trivial sets, e.g., U (X1) = U0 (X1) = {X1}. Thus, finally, we obtain grammar

G' = ({S, A, B, X1, X2, X3, X4, X5}, {0,1,2}, S,

{ S → 0X1,

A → 0X1,

B → 0X1,

X1 → 1X2,

X2 → 0B,

X2 → 0,

A → 2X3,

X3 → 0X4,

X4 → 2X5,

X5 → 1,

B → 2A,

A → 2A,

B → 2,

A → 2

}).Since our transformations preserve the generated language, every obtained grammar (G'', G''' and also G') is equivalent to G. Moreover, G' is in normal form. Thus the problem is solved.

Exercise 8. Let

G = ({S, A, B, C},{a,b},S,

{ S → abA,

S → A,

A → B,

B → abab,

B → aA,

B → aaC,

B → λ,

C → aaS

}). Give a grammar that is equivalent to G and is in normal form.

Exercise 9. Let

G = ({S, A, B, C},{a,b,c},S,

{ S → A,

S → B,

A → aaB,

B → A,

B → acS,

B → C,

C → c,

C → λ

}). Give a grammar that is equivalent to G and is in normal form.

Exercise 10. Let

G = ({S, A, B, C, D},{a,b,c},S,

{ S → aA,

S → bB,

A → B,

B → A,

B → ccccC,

B → acbcB,

C → caacA,

C → cba

}). Give a grammar that is equivalent to G and is in normal form.

Exercise 11. Let

G = ({S, A, B, C, D},{1,2,3,4},S,

{ S → 11A,

S → 12B,

A → B,

B → C,

B → 14,

B → 4431,

C → 3D,

D → 233C

}). Give a grammar that is equivalent to G and is in normal form.

In this section, we will describe the regular languages in another way. First, we define the syntax and the semantics of regular expressions, and then we show that they describe the same class of languages as the class that can be generated by regular grammars.

Definition 13. (Regular expressions). Let T be an alphabet and V = T ∪ {∅, λ, +, ·, *,(,)} be defined as its extension, such that T ∩ {∅, λ, +, ·, *,(,)} = ∅. Then, we define the regular expressions over T as expressions over the alphabet V in an inductive way:

Base of the induction:

∅ is a (basic) regular expression,

λ is a (basic) regular expression,

every a ∈ T is a (basic) regular expression;

Induction steps

if p and r are regular expressions, then (p+r) is also a regular expression,

if p and r are regular expressions, then (p · r) is also a regular expression (we usually eliminate the sign · and write (pr) instead (p · r)),

if r is a regular expression, then r* is also a regular expression.

Further, every regular expression can be obtained from the basic regular expressions using finite number of induction steps.

Definition 14 (Languages described by regular expressions). Let T be an alphabet. Then, we define languages described by the regular expressions over the alphabet T following their inductive definition.

Basic languages:

∅ refers to the empty language {},

λ refers to the language {λ},

for every a ∈ T, the expression refers to the language {a};

Induction steps:

if p and r refer to the languages Lp and Lr, respectively, then the regular expressions (p+r) refers to the language Lp ∪ Lr,

if p and r refer to the languages Lp and Lr, respectively, then the regular expressions (p · r) or (pr) refers to the language Lp · Lr,

if r refers to a language Lr then r* refers to the language Lr*.

The language operations used in the above definition are the regular operations:

the addition (+) is the union of two languages,

the product is the concatenation of two languages, and

the reflexive and transitive closure of concatenation is the (Kleene-star) iteration of languages.

Two regular expressions are equivalent if they describe the same language. Here are some examples:

| (p+r) | ≡ | (r+p) | (commutativity of union) |

| ((p+r)+q) | ≡ | (p+(r+q)) | (associativity of union) |

| (r+∅) | ≡ | r | (additive zero element, unit element for union) |

| ((pr)q) | ≡ | (p(rq) | (associativity of concatenation) |

| (rλ) | ≡ | (λr) ≡ r | (unit element for concatenation) |

| ((p+r)q) | ≡ | ((pq)+(rq)) | (left distributivity) |

| (p(r+q)) | ≡ | ((pr)+(pq)) | (right distributivity) |

| (r∅) | ≡ | ∅ | (zero element for concatenation) |

| λ * | ≡ | λ | (iteration of the unit element) |

| ∅ * | ≡ | λ | (iteration of the zero element) |

| (rr*) | ≡ | (r*r) | (positive iteration) |

Actually, the values of the last equivalence are frequently used, and they are denoted by r+, i.e., r+ ≡ rr* by definition. This type of iteration which does not allow for 0 times iteration, i.e., only positive numbers of iterations are allowed, is usually called Kleene-plus iteration.

With the use of the above equivalences we can write most of the regular expressions in a shorter form: some of the brackets can be eliminated without causing any ambiguity in the language described. The elimination of the brackets is usually based on the associativity (both for the union and for the concatenation). Some further brackets can be eliminated by fixing a precedence order among the regular operations: the unary operation (Kleene-)star is the strongest, then the concatenation (the product), and (as usual) the union (the addition) is the weakest.

We have regular grammars to generate languages and regular expressions to describe languages, but these concepts are not independent. First we will prove one direction of the equivalence between them.

Theorem 3. Every language described by a regular expression can be generated by a regular grammar.

Proof. The proof goes by induction based on the definition of regular expressions. Let r be a basic regular expression, then

if r is ∅, then the empty language can be generated by the regular grammar

(S, A, T, S, {A → a});

if r is λ, then the language {λ} can be generated by the regular grammar

(S, T, S, {S → λ});

if r is a for a terminal a ∈ T, then the language {a} is generated by the regular grammar

(S, T, S, {S → a}).

If r is not a basic regular expression then the following cases may occur:

r is (p+q) with some regular expressions p,q such that the regular grammars Gp = (Np, T, Sp, Pp) and Gq = (Nq, T, Sq, Pq) generate the languages described by expression p and q, respectively, where Np ∩ Nq = ∅ (this can be done by renaming nonterminals of a grammar without affecting the generated language). Then let

G = (Np ∪ Nq ∪ {S}, T, S, Pp ∪ Pq ∪ {S → Sp, S→ Sq}),

where S ∉ Np ∪ Nq is a new symbol. It can be seen that G generates the language described by expression r.

r is (p · q) with some regular expressions p,q such that the regular grammars Gp = (Np, T, Sp, Pp) and Gq = (Nq, T, Sq, Pq) generate the languages described by expression p and q, respectively, where Np ∩ Nq = ∅. Then let

G = (Np ∪ Nq,T, Sp, Pq ∪ {A → uB∣A → uB ∈ Pp, A,B ∈ Np, u ∈ T*} ∪ {A → uSq∣A → u ∈ Pp, A ∈ Np, u ∈ T*}).

It can be shown that G generates the language described by expression r.

r is a regular expression of the form q* for a regular expression q. Further let Gq = (Nq, T, Sq, Pq) be a regular grammar that generates the language described by expression q. Then, let

G = (Nq ∪ {S},T,S, Pq ∪ {S → λ, S → Sq} ∪ {A → uSq∣A → u ∈ Pq, A ∈ Nq, u ∈ T*}),

where S ∉ Nq. It can be shown that G generates the language described by expression r.

Since every regular expression built by finitely many applications of the induction step, for any regular expression one can construct a regular grammar such that the grammar generates the language described by the expression.

QED.

When we want to have a grammar that generates the language given by a regular expression, the process can be faster, if we know that every singleton, i.e., language containing only one word, can easily be obtained by a grammar having only one nonterminal (the start symbol) and only one production that allows to generate the given word in one step.

Example 20. Let r = (cbb)*(ab+ba) be a regular expression (that describes a language over the alphabet {a,b,c}). Give a regular grammar that generates this language.

Solution:

Let us build up r from its subexpressions. According to the above observation, the language {cbb} can be generated by the grammar

Gcbb =({Scbb},{a,b,c},Scbb,{Scbb → cbb}).

Now, let us use our induction step to obtain the grammar G(cbb)* that generates the language (cbb)*: then

G(cbb)* = ({Scbb, S(cbb)*},{a,b,c},S(cbb)*,

{Scbb → ccb, S(cbb)* → λ, S(cbb)* → Scbb, S(cbb) → cbb S(cbb)}).

The languages {ab} and {ba} can be generated by grammars

Gab = ({Sab},{a,b,c},Sab,{Sab → ab})

and

Gab = ({Sba},{a,b,c},Sba,{Sba → ba}),

respectively. Their union, ab+ba, then is generated by the grammar

Gab+ba = ({Sab,Sba,Sab+ba},{a,b,c},Sab+ba,

{Sab → ab, Sba → ba, Sab+ba → Sab, Sab+ba → Sba})

according to our induction step.

Finally, we need the concatenation of the previous expressions (cbb)* and (ab+ba), and it is generated by the grammar

G(cbb*)(ab+ba) = ({Scbb, S(cbb)*,Sab,Sba,Sab+ba}, {a,b,c}, S(cbb)*,

{Scbb → cbbSab+ba, S(cbb)* → Sab+ba, S(cbb)* → Scbb, S(cbb)* → cbb S(cbb)*,

Sab → ab, Sba → ba, Sab+ba → Sab, Sab+ba → Sba})

due to our induction step. The problem is solved.

Exercise 12. Give a regular expression that describes the language containing exactly those words that contain three consecutive a's over the alphabet {a,b}.

Exercise 13. Give a regular expression that describes the language containing exactly those words that do not contain two consecutive a's (over the alphabet {a,b}).

Exercise 14. Give a regular grammar that generates the language 0*(1+22)(2*+00).

Exercise 15. Give a regular grammar that generates the language 0+1(1+0)*.

Exercise 16. Give a regular grammar that generates the language (a+bb(b+(cc)*))*(ababa+c*).

In this section we first define several variations of the finite automata distinguished by the properties of the transition function.

Definition 15 (Finite automata). Let A = ( Q, T, q0, δ, F ). It is a finite automaton (recognizer), where Q is the finite set of (inner) states, T is the input (or tape) alphabet, q0 ∈ Q is the initial state, F ⊆ Q is the set of final (or accepting) states and δ is the transition function as follows.

δ : Q × (T ∪ {λ}) → 2Q (for nondeterministic finite automata with allowed λ-transitions);

δ : Q × T → 2Q (for nondeterministic finite automata without λ-transitions);

δ : Q × T → Q (for deterministic finite automata, d can be partially defined);

δ : Q × T → Q (for completely defined deterministic finite automata (it is not allowed that δ is partial function, it must be completely defined).

One can observe, that the second variation is a special case of the first one (not having λ-transitions). The third variation is a special case of the second one having sets with at most one element as images of the transition function, while the fourth case is more specific allowing sets exactly with one element.

One can imagine a finite automaton as a machine equipped with an input tape. The machine works on a discrete time scale. At every point of time the machine is in one of its states, then it reads the next letter on the tape (the letter under the reading head), or maybe nothing (in the first variations), and then, according to the transition function (depending on the actual state and the letter being read, if any) it goes to a/the next state. It may happen in some variations that there is no transition defined for the actual state and letter, then the machine gets stuck and cannot continue its run.

There are two widely used ways to present automata: by Cayley tables or by graphs. When an automaton is given by a Cayley table, then the 0th line and the 0th column of the table are reserved for the states and for the alphabet, respectively (and it is marked in the 0th element of the 0th row). In some cases it is more convenient to put the states in the 0th row, while in some cases it is a better choice to put the alphabet there. We will look at both possibilities. The initial state should be the first among the states (it is advisable to mark it by a → sign also). The final states should also be marked, they should be circled. The transition function is written into the table: the elements of the set δ(q,a) are written (if any) in the field of the column and row marked by the state q and by the letter a. In the case when λ-transitions are also allowed, then the 0th row or the column (that contains the symbols of the alphabet) should be extended by the empty word (λ) also. Then λ-transitions can also be indicated in the table.

Automata can also be defined in a graphical way: let the vertices (nodes, that are drawn as circles in this case) of a graph represent the states of the automaton (we may write the names of the states into the circles). The initial state is marked by an arrow going to it not from a node. The accepting states are marked by double circles. The labeled arcs (edges) of the graph represent the transitions of the automaton. If p ∈ δ (q,a) for some p,q ∈ Q, a ∈ T ∪ {λ}, then there is an edge from the circle representing state q to the circle representing state p and this edge is labeled by a. (Note that our graph concept is wider here than the usual digraph concept, since it allows multiple edges connecting two states, in most cases these multiple edges are drawn as normal edges having more than 1 labels.)

In this way, implicitly, the alphabet is also given by the graph (only those letters are used in the automaton which appear as labels on the edges).

In order to provide even more clarification, we present an example. We describe the same nondeterministic automaton both by a table and by a graph.

Example 21. Let an automaton be defined by the following Cayley table:

| T Q | → q0 | q1 | q2 | q3 |

|---|---|---|---|---|

| a | q1 | q1 | q2, q3 | - |

| b | q0 | q0 | - | q3 |

| c | q0 | q2 | - | q1,q2,q3 |

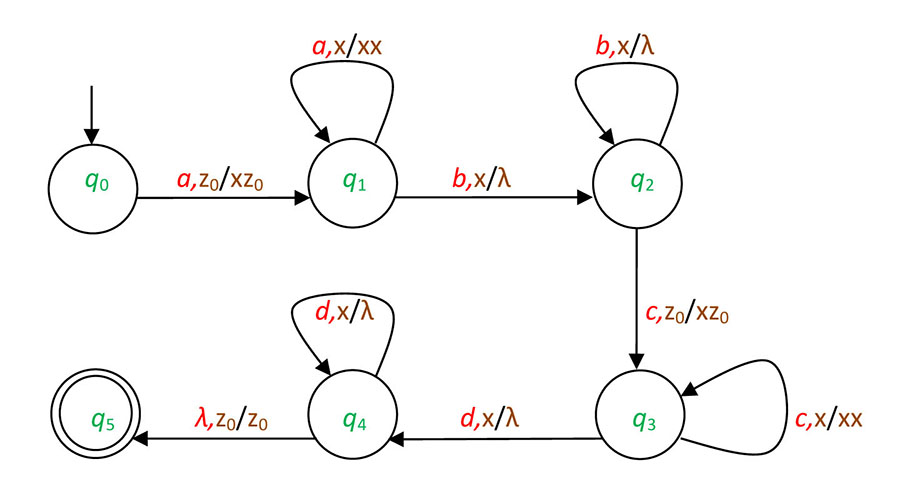

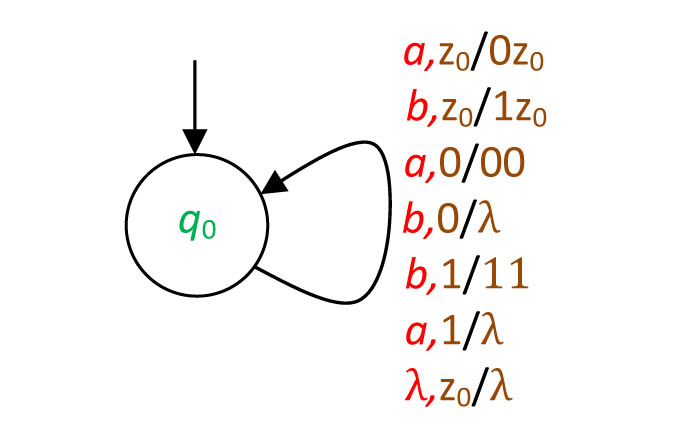

Figure 2.2. shows the graph representation of the same automaton.

These automata are used to accept words, and thus, languages:

Definition 16. (Language accepted by finite automaton). Let A = (Q, T, q0, δ, F) be an automaton and w ∈ T* be an input word. We say that w is accepted by A if there is a run of the automaton, i.e., there is an alternating sequence q0 t1 q1 ... qk-1 tk qk of states and transitions, that starts with the initial state q0, (qi ∈ Q for every i, they are not necessarily distinct, e.g., qi = qj is allowed even if i ≠ j) and for every of its transition ti of the sequence

ti : qi ∈ δ (qi-1,ai) in nondeterministic cases

ti : qi = δ (qi-1,ai) in deterministic cases,

where a1... ak = w, and qk ∈ F. This run is called an accepting run.

All words that A accepts form L(A), the language accepted (or recognized) by the automaton A.

Example 22. Let A be the automaton drawn in the next animations. We show a non-accepting run of a non-deterministic automaton A (with λ-transitions) in Animation 3.

However the word 1100 is accepted by A, since it has also an accepting run that is shown in Animation 4.

These finite automata are also called finite-state acceptors or Rabin-Scott automata. Let us see the language class(es) that can be accepted by these automata.

Two automata are equivalent if they accept the same language.

We have defined four types of finite automata and by the definition it seems that the latter ones are more restricted than the former ones. However, it turns out that all four versions characterize the same language class:

Theorem 4. For every finite automaton there is an equivalent (completely defined) deterministic finite automaton.

Proof. The proof is constructive. Let A = (Q, T, q0, δ, F) be a nondeterministic finite automaton (allowing λ-transitions). Let us define, first, the λ-closure of an arbitrary set q' of states.

let U1 ({q'}) = {q'},

let Ui+1 ({q'}) = Ui (q') ∪ {p ∈ Q∣∃r ∈ Ui (q') such that p ∈ δ (r,λ)}, for i > 1.

Since Q is finite, there is a value k such that Uk (q') = Uk+1 (q'), let us denote this set by U(q'). Practically, this set contains all the states that can be reached starting from a state of q' by only λ-transitions.

Now we are ready to construct the automaton A' =

(Q', T, U (q0), δ', F'), where Q'

= 2Q, F' ⊂ Q' includes every element

q' ∈ Q' such that q' ∩ F ≠ ∅. The transition

function δ' is defined as follows:  for any a ∈ T and q' ∈ Q'.

Actually while this can be done for all subsets of Q, subsets which cannot be reached by transitions from

U (q0) by δ' can be deleted (these

useless states are not needed).

for any a ∈ T and q' ∈ Q'.

Actually while this can be done for all subsets of Q, subsets which cannot be reached by transitions from

U (q0) by δ' can be deleted (these

useless states are not needed).

One can observe that A' is a completely defined deterministic automaton. Also, every run of A has an equivalent run of A', in fact, A' simulates every possible run of A on the input at the same time. Conversely, if A' has an accepting run, then A also has at least one accepting run for the same input. Therefore, A and A' accept the same language, consequently they are equivalent.

QED

Our previous proof gives an algorithm for the ''determinization'' of any finite automaton having only states reachable from the initial state as we will see it in details in Example 23. Note that even if we deleted these useless states, the automaton may not be minimal in the sense that the same language can be accepted by a completely defined deterministic finite automaton having less number of states than our automaton.

Example 23. Let a nondeterministic automaton be defined by the following Cayley table (note that in this algorithm the rows refer to the states of the automaton and the columns to the letters of the alphabet, and in this automaton λ-transitions are allowed):

| Q T | a | b | λ |

|---|---|---|---|

| → q0 | q0,q1 | q2 | - |

| q1 | q1 | - | q2 |

| q2 | q0 | q1 | - |

We start with the λ-closure of the initial state U (q0) = {q0}. This set will count as the initial state of the new automaton: let it be in the first row of the table of this new automaton. Let us see which sets of states can be obtained from this set by using the letters of the alphabet:

by letter a the set {q0, q1} is obtained, however, its λ-closure is {q0, q1, q2};

by letter b the set {q2} is obtained and its λ-closure is {q2}.

Let us write these two sets in the second and third row of the table. Now let us see what sets of states can be reached from these sets. First, let us see the set {q0, q1, q2}.

by letter a the set {q0, q1} is obtained, however, its λ-closure is {q0, q1, q2};

by letter b the set {q1, q2} is obtained and its λ-closure is {q1, q2}.

Since this latter set is not in the table yet, it is added to the fourth row. Now let us see the set {q2}.

by letter a the set {q0} is obtained, and its λ-closure is {q0};

by letter b the set {q1} is obtained and its λ-closure is {q1, q2}.

Since both of these two sets are already in the table we do not need to add a new row. Finally, let us analyse the set {q1,q2} (that is the last row of the table).

by letter a the set {q0, q1} is obtained, and its λ-closure is {q0, q1, q2};

by letter b the set {q1} is obtained and its λ-closure is {q1, q2}.

These sets are in the table. So the table is filled. The initial state of the new deterministic automaton is {q0}. The final states are: {q0, q1, q2}, {q2}, and {q1, q2}. The next table shows the resulting deterministic finite automaton:

| Q T | a | b |

|---|---|---|

| → {q0} | {q0, q1, q2} | {q2} |

| {q0, q1, q2} | {q0, q1, q2} | {q1, q2} |

| {q2} | {q0} | {q1, q2} |

| {q1, q2} | {q0, q1, q2} | {q1, q2} |

Example 24. Animation 5. shows an example how to obtain a completely defined deterministic automaton that is equivalent to the original nondeterministic automaton.

Let A = (Q, T, q0, δ, F) be a deterministic finite automaton such that each of its states is reachable from its initial state (there are no useless states). Then we can construct the minimal deterministic finite automaton that is equivalent to A in the following way:

Let us divide the set of states into two groups obtaining the classification C1 = {F, Q\F}. (We denote the class where state q is by C1[q].)

Then, for i > 1 the classification Ci is obtained from Ci-1: the states p and q are in the same class by Ci if and only if they are in the same class by Ci-1 and for every a ∈ T they behave similarly: δ (p,a) and δ (q,a) are in the same class by Ci.

Set Q is finite and, therefore, there is a classification Cm such that it is the same as Cm+1.

Then, we can define the minimal completely defined deterministic automaton that is equivalent to A: its states are the groups of the classification Cm, the initial state is the group containing the initial state of the original automaton, the final states are those groups that are formed from final states of the original automaton, formally:

(Cm, T, Cm [q0], δCm, FCm),

where δCm(Cm [q], a) = Cm [δ (q,a)] for every Cm [q] ∈ Cm, a ∈ T and FCm = {Cm [q]∣q ∈ F}.

It may happen that there are some words w ∈ T* that are not prefixes of any words of a regular language L. Then, the minimal completely defined deterministic automaton contains a sink state, that is the state where the word w and other words with the same property lead the automaton. When we want to have a minimal deterministic finite automaton for these languages, allowing partial (not completely defined) finite automata, then we may delete this sink state (with the transitions into it) by decreasing the number of the states by one.

Let us see yet another example. When applying the minimization algorithm it is more convenient to put the states to the 0th row of the table and the letters of the alphabet to the 0th column of the table.

Example 25. Let the deterministic automaton A be given as follows:

| T Q | → q0 | q1 | q2 | q3 | q4 | q5 | q6 |

|---|---|---|---|---|---|---|---|

| a | q2 | q5 | q1 | q1 | q2 | q1 | q0 |

| b | q1 | q0 | q3 | q4 | q5 | q3 | q2 |

Give a minimal deterministic automaton that is equivalent to A.

Solution:

Before applying the algorithm we must check which states can be reached from the initial state: from q0 one can reach the states q0, q2, q1, q3, q5, q4. Observe that the automaton cannot enter state q6, therefore, this state (column) is deleted. The task is to minimize the automaton

| T Q | → q0 | q1 | q2 | q3 | q4 | q5 |

|---|---|---|---|---|---|---|

| a | q2 | q5 | q1 | q1 | q2 | q1 |

| b | q1 | q0 | q3 | q4 | q5 | q3 |

by the algorithm. When we perform the first classification of the states C1 = {Q1, Q2} by separating the accepting and non-accepting states: Q1 = {q2, q4, q5}, Q2 = {q0, q1, q3} then we have:

| Q | Q1 | Q2 | |||||

|---|---|---|---|---|---|---|---|

| T | q2 | q4 | q5 | →q0 | q1 | q3 | |

| a | Q2 | Q1 | Q2 | Q1 | Q1 | Q2 | |

| b | Q2 | Q1 | Q2 | Q2 | Q2 | Q1 | |

Then C2 = {Q11, Q12, Q21, Q22} with Q11 = {q2, q5}, Q12 = {q4}, Q21 = {q0, q1}, Q22 = {q3}. Then according to this classification we have

| Q | Q11 | Q12 | Q21 | Q22 | |||

|---|---|---|---|---|---|---|---|

| T | q2 | q5 | q4 | →q0 | q1 | q3 | |

| a | Q21 | Q21 | Q11 | Q11 | Q11 | Q21 | |

| b | Q22 | Q22 | Q11 | Q21 | Q21 | Q12 | |

Since C3 = C2 we have the solution, the minimal deterministic finite automaton equivalent to A:

| T Q | Q11 | Q12 | →Q21 | Q22 |

|---|---|---|---|---|

| a | Q21 | Q11 | Q11 | Q21 |

| b | Q22 | Q11 | Q21 | Q12 |

We conclude this subsection by a set of exercises.

Exercise 17. Give a finite automaton that accepts the language of words that contain the consecutive substring baab over the alphabet {a,b}.

Exercise 18. Let the automaton A be given in a Cayley table as follows:

| T Q | →q0 | q1 | q2 | q3 | q4 |

|---|---|---|---|---|---|

| 0 | q0 | q1 | q2 | q4 | q1 |

| 1 | q1 | q2 | q3 | q3 | q4 |

Draw the graph of A. What is the type of A (e.g., nondeterministic with allowed λ-transitions)?

Exercise 19. The graph of the automaton A is given in Figure 2.3.

Describe A by utilizing a Cayley table. What is the type of A (e.g., deterministic with partially defined transition function)? What is the language that A recognizes?

Exercise 20. Let a nondeterministic automaton with λ-transitions, A, be defined by the following Cayley table:

| Q T | a | b | c | λ |

|---|---|---|---|---|

| →q0 | q0, q3 | q1 | - | - |

| q1 | q1 | q3 | - | q2 |

| q2 | - | q0 | q2 | - |

| q3 | q1 | q1 | q2 | q0 |

Give a completely defined deterministic automaton that is equivalent to A.

Exercise 21. Let a nondeterministic automaton A be defined by the following table:

| Q T | 0 | 1 |

|---|---|---|

| →q0 | q0, q1 | q1 |

| q1 | q1 | q2, q3 |

| q2 | q0 | - |

| q3 | q3 | q0, q1, q2 |

Give a completely defined deterministic automaton that accepts the same language as A.

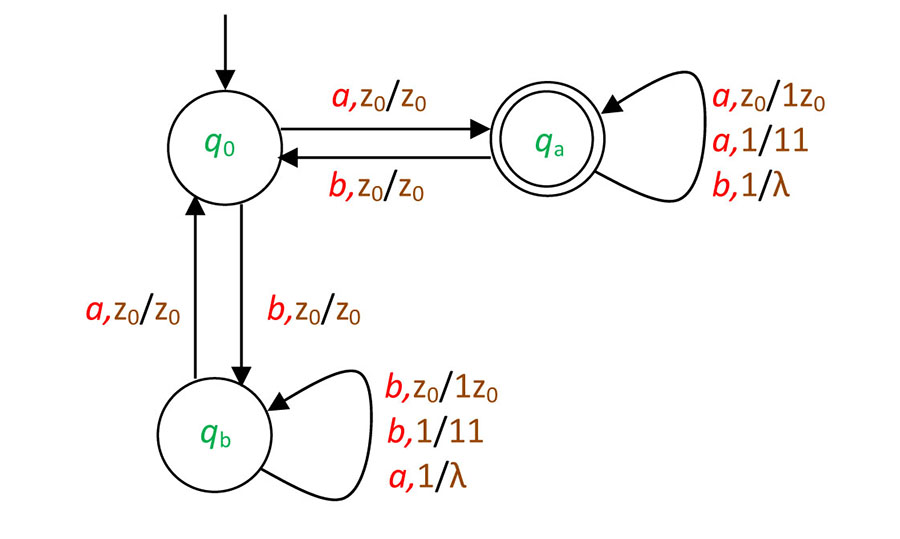

Exercise 22. Let a nondeterministic automaton A be defined by the graph shown in Figure 2.4.

Give a completely defined deterministic automaton that accepts the same language as A.

Exercise 23. Let the deterministic automaton A be given as follows:

| T Q | →q0 | q1 | q2 | q3 | q4 | q5 | q6 | q7 |

|---|---|---|---|---|---|---|---|---|

| a | q6 | q3 | q1 | q7 | q1 | q0 | q4 | q1 |

| b | q4 | q7 | q1 | q2 | q3 | q1 | q5 | q6 |

| c | q5 | q2 | q6 | q1 | q3 | q6 | q0 | q6 |

Give a minimal deterministic automaton that is equivalent to A.

Exercise 24. Let the deterministic automaton A be given by the following table.

| T Q | →q0 | q1 | q2 | q3 | q4 | q5 | q6 | q7 | q8 |

|---|---|---|---|---|---|---|---|---|---|

| 0 | q3 | q5 | q7 | q0 | q2 | q0 | q4 | q2 | q5 |

| 1 | q8 | q1 | q3 | q2 | q6 | q7 | q3 | q5 | q2 |

Give a minimal deterministic automaton that is equivalent to A. (Hint: check first which states can be reached from the initial state.)

Exercise 25. Let a nondeterministic automaton A be defined by the graph shown in Figure 2.5.

Give a minimal deterministic automaton that is equivalent to A.

Now we are going to show that exactly the class of regular languages can be described with the help of the finite automata. First we show that every regular language (type 3 language) can be accepted by finite automata.

Theorem 5. Every language generated by a regular grammar is accepted by a finite automaton.

Proof. The proof is constructive. Let G = (N, T, S, P) be a regular grammar in normal form. Then, let the finite automaton

A = (Q, T, q0, δ,F)

be defined as follows:

let Q = N ∪ {F'} (where F' ∉ N),

q0 = S.

Let the transition function δ be defined by the elements of P: let B ∈ δ (A,a) if A → aB ∈ P; and let F' ∈ δ (A,a) if A → a ∈ P. Further, let the set of accepting states be {F'} if S →λ is not in P and let F = {F',S} if S → λ ∈ P.

One can see that the successful derivations in the grammar and the accepting runs of the automaton have a one-to-one correspondence.

QED.

G = ({S, A, B, C}, {a, b, c}, S,

{ S → abbA,

S → baaB,

S → λ,

A → aS,

A → aC,

B → bC,

C → aS,

C → cc

}) generating the language L(G). Give a finite automaton that accepts the language L(G).

Solution:

First we must exclude the rules containing more than 1 terminals, as we did in the proof of Theorem 2. In this way the grammar

G' =({S, A, B, C, X1, X2, X3, X4, X5}, {a, b, c}, S,

{ S → aX1,

X1 → bX2,

X2 → bA,

S → bX3,

X3 → aX4,

X4 → aB,

S → λ,

A → aS,

A → aC,

B → bC,

C → aS,

C → cX5,

X5 → c

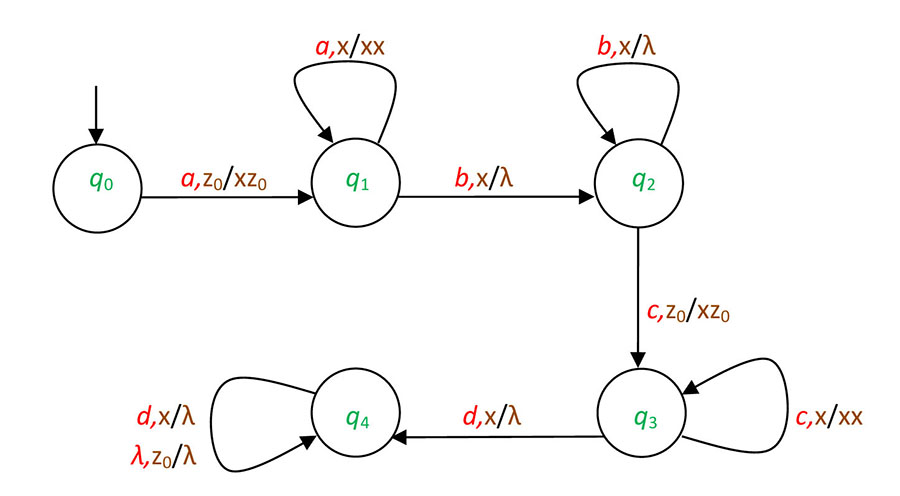

}) can be obtained. Then, we can draw the graph of automaton A as it can be seen in Figure 2.6.

Now we are going to show that the class of finite automata cannot accept more languages than the class that can be described by regular expressions.

Theorem 6. Every language accepted by a finite automaton can be described by a regular expression.

Proof. The proof is constructive. We present an algorithm that shows how one can construct a regular

expression from a finite automaton. We can restrict ourselves to deterministic finite automaton, since we have already seen that they

are equivalent to the nondeterministic finite automata. Let the states of a deterministic finite automaton be 1,2,... for the sake of simplicity, i.e.,

let A = ({1,... n}, T, 1, δ, F) be given.

Let  denote the regular expression that describes the language accepted by the automaton

({1,...,k} ∪ {i,j}, T, i, δ', {j}),

where δ' is the restriction of δ

containing only transitions from the set {i} ∪ {1,...,k} to the set

{1,...,k} ∪ {j} (1 ≤ i,j ≤ n,

0 ≤ k ≤ n and 1,..., 0 means the empty set).

denote the regular expression that describes the language accepted by the automaton

({1,...,k} ∪ {i,j}, T, i, δ', {j}),

where δ' is the restriction of δ

containing only transitions from the set {i} ∪ {1,...,k} to the set

{1,...,k} ∪ {j} (1 ≤ i,j ≤ n,

0 ≤ k ≤ n and 1,..., 0 means the empty set).

Then,  describes the regular language that is given by direct transition(s) from state

i to j.

Therefore,

describes the regular language that is given by direct transition(s) from state

i to j.

Therefore,  gives the language {λ} ∪ {a∣δ (i,a) = i}

(this language contains the empty word and possibly some one-letter-long words, i.e., it is

described by a basic regular expression or

finite union of basic regular expressions).

gives the language {λ} ∪ {a∣δ (i,a) = i}

(this language contains the empty word and possibly some one-letter-long words, i.e., it is

described by a basic regular expression or

finite union of basic regular expressions).

Further,  describes the language {a∣δ (i,a) = j}

if i ≠ j (it can contain some one-letter-long words).

These regular expressions can easily be obtained and proven to describe the languages mentioned.

describes the language {a∣δ (i,a) = j}

if i ≠ j (it can contain some one-letter-long words).

These regular expressions can easily be obtained and proven to describe the languages mentioned.

Now we use induction on the upper index:

for 1 ≤ k ≤ n.

This expression can be seen intuitively: starting from state i we could reach state j by using

the first k states in our path, either without using state k (the part  refers

to this case) or by a path reaching state

refers

to this case) or by a path reaching state  , and then reaching it arbitrarily

many times (including 0 times:

, and then reaching it arbitrarily

many times (including 0 times:  , and finally reaching state j from state

, and finally reaching state j from state

Finally,  gives a regular expression that describes exactly the

language accepted by A.

In this way it is constructively proven that for any finite automaton one can construct a regular

expression that describes the

language accepted by the automaton.

gives a regular expression that describes exactly the

language accepted by A.

In this way it is constructively proven that for any finite automaton one can construct a regular

expression that describes the

language accepted by the automaton.

QED.

Example 27. Let the Cayley table of automaton A be given as follows:

| T Q | →q1 | q2 | q3 |

|---|---|---|---|

| a | q2 | q3 | q1 |

| b | q3 | q2 | - |

Describe the accepted language L(A) by a regular expression.

Solution:

Let us describe the regular expressions  for i,j ∈ {1,2,3} by using the transitions of A.

for i,j ∈ {1,2,3} by using the transitions of A.

|  |  |

|  |  |

|  |  |

Now by using the inductive step we compute  for i,j ∈ {1,2,3}.

for i,j ∈ {1,2,3}.

Now we can continue by computing the expressions

To describe L(A) we need  Let us compute it:

Let us compute it:

Thus, the regular expression

(ab*a + b)(aab*a + ab)* aab* + ab* + (ab*a + b)(aab*a + ab)* = (ab*a + b)(aab*a + ab)* (λ + aab*) + aab*

describes L(A). The problem is solved.

Now we have proven that the three concepts we have discussed in this chapter, the regular grammars, regular expressions and finite automata are equivalent in the sense that each of them characterize exactly the class of regular languages (see Figure 2.7).

Figure 2.7. The equivalence of the three types of descriptions (type-3 grammars, regular expressions and finite automata) of the regular languages.

|

The aim of the analysis of a finite automaton is the task to describe the accepted language in another way, usually, by regular expressions. The synthesis of a finite automaton is the construction of the automaton that accepts a regular language that is usually given by a regular expression. Kleene has proven the equivalence of finite automata and regular expressions.

The minimization algorithm is very important, since the minimal, completely defined, deterministic finite automaton is (the only known) unique identification of a regular language. In this way, we can decide if two regular languages (given by regular expressions, grammars or automata) coincide or not.

We close this subsection by a set of exercises.

Exercise 26. Let

G = ({S, A, B}, {0,1}, S,

{ S → 000A,

S → 111B,

A → λ,

A → 0S,

A → 11,

B → 1S,

B → 000

}) generating language L(G). Give a finite automaton that accepts language L(G). (Hint: first transform the grammar to normal form.)

Exercise 27. Let

G = ({S, A, B, C}, {a,b}, S,

{ S → aaaA,

S → bbB,

S → C,

A → S,

A → baB,

A → ba,

B → S,

B → C,

B → b,

B → λ,

C → B,

C → aA

}) generating language L(G). Give a finite automaton that accepts language L(G).

Exercise 28. Let

G = ({S, A, B}, {a, b, c}, S,

{ S → abA,

S → bccS,

A → bS,

A → c,

A → B,

B → S,

B → aA,

B → bcc,

B → λ

}) generating language L(G). Give a finite automaton that accepts language L(G).

Exercise 29. Let the automaton A be defined by the following table:

| T Q | →q1 | q2 | q3 |

|---|---|---|---|

| 0 | q1 | q3 | q3 |

| 1 | q2 | q2 | q1 |

Give a regular expression that describes the language accepted by automaton A.

Exercise 30. Let automaton A, accepting language L(A), be defined by the Cayley table:

| T Q | →q1 | q2 | q3 |

|---|---|---|---|

| a | q1 | q3 | - |

| b | - | q2 | q1 |

| c | q2 | q2 | - |

Give a regular expression that describes language L(A).

Exercise 31. Let automaton A be as it is shown in Figure 2.8. Give a regular expression that defines the same language as A.

The word problem is to decide whether any given word w belongs to a given regular language (or not). This can be done very efficiently by using a deterministic finite automaton that accepts the given language. (Such an automaton can be constructed from a regular expression, first by Theorem 3. and then/or from a grammar by Theorem 5. and then/or from a nondeterministic finite automaton by Theorem 4.) Reading the word w can be done by ∣w∣ steps, and then, if the automaton accepts w, i.e., it arrived at an accepting state in this run, then w is an element of the language. Otherwise, (if there were some undefined transitions and the automaton gets stuck, or by reading the word finally a non accepting state is reached) w does not belong to the given regular language. The decision on an input word of length n is done in at most n steps, therefore, this is a real time algorithm (i.e., linear time algorithm with coefficient 1). There is no faster algorithm that can read the input, so the word problem for regular languages can be solved very efficiently.

Exercise 32. Let automaton A be given as it can be seen in Figure 2.9.

Decide whether the words

abab,

baba,

aaaabbb,

bbbaaaab,

baabaabaabb and

aaaabbbababaaa are in L(A).

Exercise 33. Let the nondeterministic automaton A be defined by the Cayley table:

| T Q | 0 | 1 | λ |

|---|---|---|---|

| →q0 | q1, q4 | q5 | q2 |

| q1 | q3 | q1, q4 | - |

| q2 | q1 | q2 | - |

| q3 | - | - | q2 |

| q4 | - | q4 | - |

| q5 | q5 | q5 | q0 |

Decide which of the words 0011, 0101, 0110, 01110010, 100, 1011110 belong to the accepted language of A.

Exercise 34. Let

G = ({S, A, B}, {a, b, c}, S,

{ S → abcS,

S → bcA,

S → acB,

A → aS,

A → a,

B → bS,

B → bcc,

S → ccc

}) be a regular grammar. Decide which of the following words can be generated by G:

abcccc,

acbcc,

acbbacbccc,

bca,

bcacacc,

bcaabcacbcc,

ccc,

cccacbc.

Exercise 35. Given the regular language

a* + (a+b)*baba (a+b)*),

decide if the following words are in the described language or not:

aaaaa,

aaabaa,

ababa,

abbabaaba.

In the next part of this section we concentrate on the closure properties of the class of regular languages.

By the constructive proof of Theorem 3, it is also shown that the class of regular languages is closed under the regular operations. Now let us consider the set theoretical operations: intersection and complement.

Theorem 7. The class of regular languages is closed under intersection and complement.

Proof. The proof is constructive in both cases, and deterministic finite automata are used.

Let us start with the complement. Let a regular language be given by a complete deterministic

finite automaton A = (Q, T, q0, δ, F) that recognizes it.

This automaton has exactly one run for

every word of T*, and accepts a word if this run is finished in an accepting state.

Then  recognize exactly those words that are not accepted

by A, and thus the finite automaton

recognize exactly those words that are not accepted

by A, and thus the finite automaton  accepts the complement of the original regular language.

accepts the complement of the original regular language.

For the intersection, let two regular languages L1 and L2 be given by complete deterministic automata A = (Q, T, q0, δ,F) and A' = (Q', T, q'0, δ', F') that recognize them, respectively. Then, let A∩ = (Q × Q', T, (q0, q'0), δ'', F× F'), with transition function δ'' ((q,q'), a) = (δ(q,a), δ' (q',a)) for every q ∈ Q, q' ∈ Q' and a ∈ T. The states are formed by pairs of the states of the automata A and A'. Thus, A∩ simulates the work of these two automata simultaneously and accepts exactly those words that are accepted by both of these machines. Thus the intersection of the languages L1 and L2 is accepted by a finite automaton, and thus it is also a regular language.

QED.

Example 28. Let the automaton A and A' accept the languages L(A) and L(A'), respectively. Let them be defined in the following way: the table of A as shown below.

| T Q | →q1 | q2 | q3 |

|---|---|---|---|

| a | q3 | q2 | q3 |

| b | q2 | q2 | q3 |

the table of A' as shown below

| T Q' | →q'1 | q'2 |

|---|---|---|

| a | q'1 | q'1 |

| b | q'2 | q'2 |

Give an automaton that accepts the complement of L(A) and an automaton that accepts the intersection of L(A) and L(A'). What are the languages accepted by these automata?

Solution:

Let us take the automaton that accepts the complement of L(A) by interchanging the role of

accepting and non-accepting states in A. Let  be defined by the following Cayley table:

be defined by the following Cayley table:

| T Q | →q1 | q2 | q3 |

|---|---|---|---|

| a | q3 | q2 | q3 |

| b | q2 | q2 | q3 |

Now let us construct A∩ by using the Cartesian product of the sets of states Q and Q'.

| T Q | →(q1, q'1) | (q2, q'1) | (q3, q'1) | (q1, q'2) | (q2, q'2) | (q3, q'2) |

|---|---|---|---|---|---|---|

| a | (q3, q'1) | (q2, q'1) | (q3, q'1) | (q3, q'1) | (q2, q'1) | (q3, q'1) |

| b | (q2, q'2) | (q2, q'2) | (q3, q'2) | (q2, q'2) | (q2, q'2) | (q3, q'2) |

Actually, A accepts the language a(a+b)* (words starting by letter a),

and A' accepts the language (a+b)* b

(words that ends with letter b), the automaton  accepts the language λ + b(a+b)

* (words do not start with letter a over the alphabet {a,b}),

while A∩ accepts the

language a (a+b)* b (words starting with a and ending with b).

accepts the language λ + b(a+b)

* (words do not start with letter a over the alphabet {a,b}),

while A∩ accepts the

language a (a+b)* b (words starting with a and ending with b).

Exercise 36. Let the table of A be

| T Q | →q1 | q2 | q3 |

|---|---|---|---|